Alright, so this week we've gone through the following shading techniques:

Emissive: emits light or simulates light originating from an object.

(http://udn.epicgames.com/Three/rsrc/Three/Lightmass/SmallMeshAreaLights.jpg)

(http://www.directxtutorial.com/Lessons/9/B-D3DGettingStarted/3/17.png)

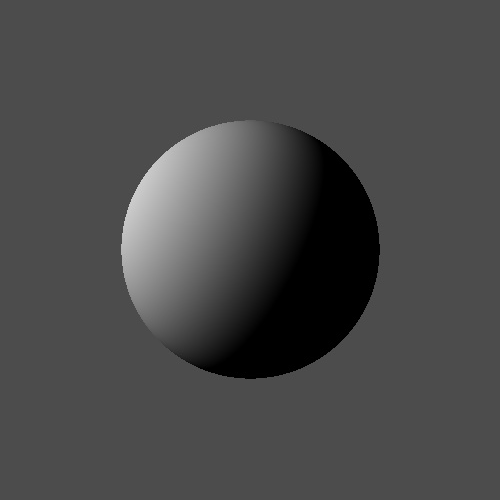

Ambient: basically a really simple hack to simulate light bouncing around, or "light that is always there". It lights up the model even when there are no lights around.

(http://zach.in.tu-clausthal.de/teaching/cg_literatur/glsl_tutorial/images/dirdiffonlyvert.gif)

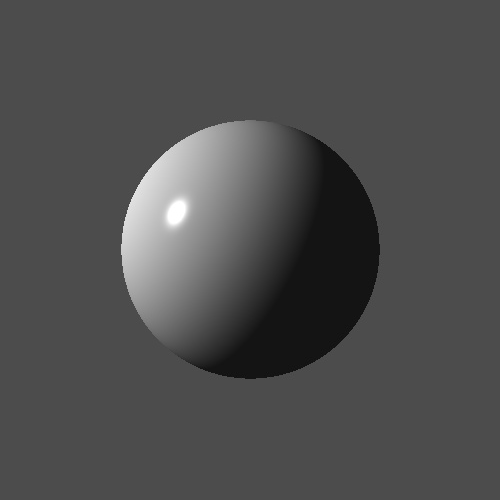

Specular: a bright spot that simulates light reflecting off the surface toward the viewer. It is often used to give surfaces a metallic or reflective look.

(http://www.directxtutorial.com/Lessons/9/B-D3DGettingStarted/3/19.png)

Also, ambient, diffuse and specular are combined to make the "Phong" shading model:

(http://tomdalling.com/wp-content/uploads/800px-Phong_components_version_4.png)

By adding all of these components together, we get a nicely lit surface with a highlight, and this is the basis for most lighting techniques.

The math...

To perform light calculations you'll need components like the surface's normal vector, the light source position, the view direction and the reflected light vector (there are way more, but these are just the most common ones).

Diffuse is done by taking the dot product of the normal and light direction vectors (both normalized). For a surface facing the light this gives you an intensity from 0 to 1, which you multiply with your final color. A negative result means the surface faces away from the light, so it gets clamped to 0. Values closer to 0 will give a darker color while values closer to 1 will give you a brighter color.

So it should look like: DiffuseLight = max(N dot L, 0) * DiffuseIntensity;

And of course you can factor in an intensity variable, as shown above, to adjust how bright it is.
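To make the diffuse step concrete, here is a minimal sketch in plain Python (standing in for shader code). The function names `normalize`, `dot` and `diffuse_term` are made up for illustration, and the sketch clamps negative dot products to zero so that surfaces facing away from the light come out black.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))

def diffuse_term(normal, to_light, diffuse_intensity=1.0):
    """Diffuse factor: clamped N dot L, scaled by an intensity knob.

    `to_light` points from the surface toward the light.
    """
    n = normalize(normal)
    light_dir = normalize(to_light)
    return max(dot(n, light_dir), 0.0) * diffuse_intensity
```

A surface facing the light head-on gets the full intensity, and the value falls off as the light direction tilts away from the normal.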

Specular is done by obtaining the reflected light vector, then getting the dot product of the view direction and the reflected light vector. As our view direction gets closer to the reflected light, the value gets closer to 1, which is how we get that spot of light on a surface.

SpecularLight = pow(max(R dot V, 0), SpecularShininess);

In this one you can factor in a shininess variable and raise the specular value to that power. A higher shininess makes the highlight smaller and sharper.
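The specular step can be sketched the same way. This is only an illustration in plain Python: `reflect` follows the same I - 2(N dot I)N convention as the GLSL built-in of that name, while `specular_term` and the default shininess of 32 are assumptions of mine, not values from the course.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))

def reflect(incident, normal):
    """Reflect an incident vector about a unit normal: I - 2 (N dot I) N."""
    d = dot(normal, incident)
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))

def specular_term(normal, to_light, to_viewer, shininess=32.0):
    """Specular factor: clamped R dot V raised to the shininess power."""
    n = normalize(normal)
    light_dir = normalize(to_light)
    view_dir = normalize(to_viewer)
    # The light travels toward the surface, so the incident vector is -L.
    incoming = tuple(-c for c in light_dir)
    r = reflect(incoming, n)
    return max(dot(r, view_dir), 0.0) ** shininess
```

When the viewer sits exactly along the mirrored light direction the term is 1, and it drops off faster the larger `shininess` is.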

Ambient is the easiest of them all. All you need to do is hard-code a set color value like (0.1,0.1,0.1) and you're done! This allows the object to be lit in areas where there is no light present.

AmbientLight = vec3(0.1,0.1,0.1);

Then you just add them all together to get the final color.

FinalColor = AmbientLight + DiffuseLight + SpecularLight;
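Here is a small sketch of that final sum in plain Python, assuming the diffuse and specular values have already been computed as single numbers. Tinting the diffuse part by a surface color, using a white light color, and clamping each channel to 1.0 are my own assumptions for the example, and `phong_color` is a made-up name.

```python
def clamp01(x):
    """Keep a channel value inside the displayable 0..1 range."""
    return max(0.0, min(1.0, x))

def phong_color(surface_color, diffuse, specular,
                ambient=(0.1, 0.1, 0.1), light_color=(1.0, 1.0, 1.0)):
    """Add ambient, diffuse and specular per channel (R, G, B).

    `diffuse` and `specular` are scalar factors in 0..1.
    The diffuse part is tinted by the surface color; the specular
    highlight takes the color of the light.
    """
    return tuple(
        clamp01(a + diffuse * s * lc + specular * lc)
        for a, s, lc in zip(ambient, surface_color, light_color)
    )
```

With both factors at 0 the result is just the ambient color, which is why unlit areas never go completely black.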

We've also covered toon/cel shading, which is pretty interesting.

(http://dailyemerald.com/wp-content/uploads/2013/09/Wind-Waker-Windfall.jpg)

Toon shading isn't really difficult. You're doing the same shading techniques as the ones mentioned earlier, but the final color values are snapped into a few specified ranges instead of blending smoothly, which gives that cartoony effect.
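As a rough illustration of the banding idea, the sketch below snaps a lighting intensity to one of a few evenly spaced levels. The name `toon_quantize` and the default of 4 bands are assumptions for the example. Real toon shaders often use hand-picked thresholds or a ramp texture instead.

```python
import math

def toon_quantize(intensity, bands=4):
    """Snap an intensity in 0..1 to one of `bands` evenly spaced levels.

    `bands` must be at least 2.
    """
    clamped = max(0.0, min(1.0, intensity))
    # floor() picks the band; min() keeps intensity 1.0 in the top band.
    level = min(math.floor(clamped * bands), bands - 1)
    return level / (bands - 1)
```

Any intensities that land in the same band come out identical, which produces the flat regions with hard edges between them.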

Now back on the development side...

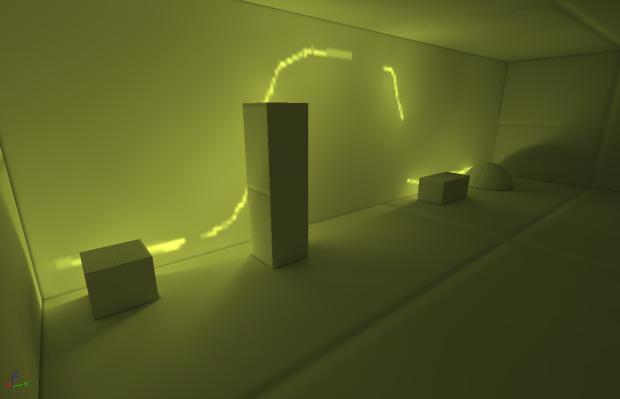

We really need nice glow and holographic effects for our game since it takes place in a vibrant night city.

I've done a bit of research and came across this article:

(http://www.gamasutra.com/view/feature/2107/realtime_glow.php)

After reading it, I found it to be actually pretty simple. I've come up with a few ideas...

Here's what I did.

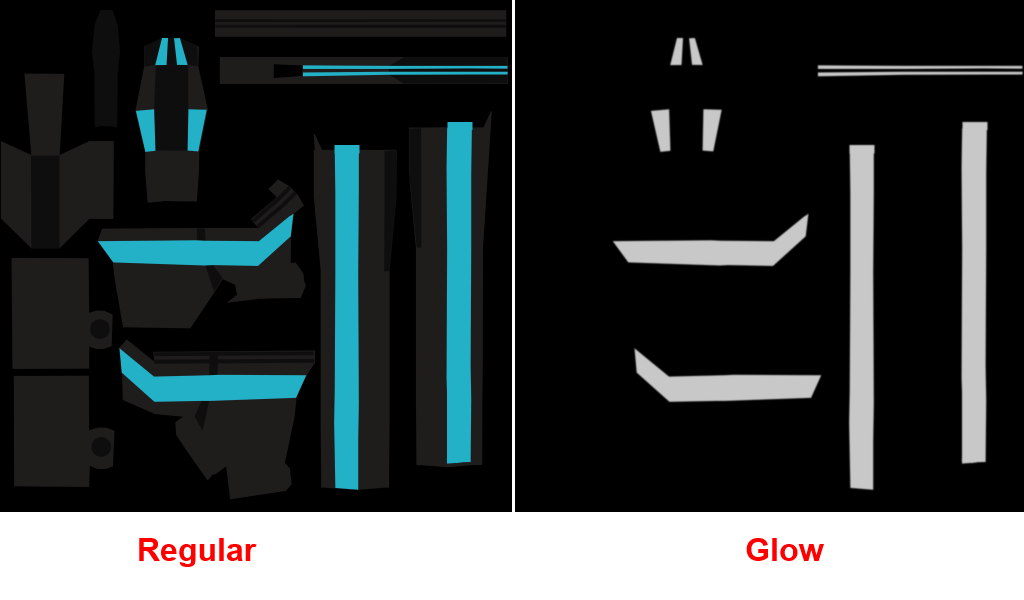

Have 2 different types of textures, 1 regular texture and 1 glow texture.

(Kevin Pang's shotgun texture)

(for glow texture, all black pixels won't glow)

Create multiple frame buffers to store the glow and blurring post processing effects.

This will need multiple draw passes:

Pass 1: render all geometry with the glow texture applied

Passes 2 and 3: Ok, now blur everything using Gaussian blur (this step takes 2 passes, blurring things horizontally and then vertically)

Pass 4: Render objects normally with normal textures

Pass 5: Blend the final (blurred) glow frame texture onto the regular scene frame and BAM!
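The passes above can be sketched on the CPU with a tiny grayscale image, just to show the data flow. This is not the game's shader code: the real thing runs as fragment shaders over frame buffers. All function names here are made up, and the clamp-to-edge sampling, additive blend and `strength` knob are assumptions of mine.

```python
import math

def gaussian_kernel(radius, sigma):
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_horizontal(image, kernel):
    """Blur each row; samples outside the image clamp to the edge pixel."""
    radius = len(kernel) // 2
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                sx = min(max(x + k - radius, 0), width - 1)
                acc += row[sx] * w
            new_row.append(acc)
        out.append(new_row)
    return out

def transpose(image):
    """Swap rows and columns of a 2D list."""
    return [list(col) for col in zip(*image)]

def blur_vertical(image, kernel):
    """Vertical blur done as a horizontal blur on the transposed image."""
    return transpose(blur_horizontal(transpose(image), kernel))

def composite_glow(scene, glow_map, radius=2, sigma=1.0, strength=1.0):
    """Blur the glow map in two passes, then add it onto the scene."""
    kernel = gaussian_kernel(radius, sigma)
    blurred = blur_vertical(blur_horizontal(glow_map, kernel), kernel)
    return [[min(1.0, s + strength * g) for s, g in zip(srow, grow)]
            for srow, grow in zip(scene, blurred)]
```

Splitting the blur into a horizontal and a vertical pass gives the same result as one 2D Gaussian but needs far fewer samples per pixel, which is why the blur takes two passes.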

My group members and I agree that it makes things pop a lot more, and I'm quite happy with this effect.

(hologram test lol)

I'll be looking forward to using this effect in my night city. Well, that's all for this week, later!