For a long time I have been amazed and captivated by the beautiful art style of thatgamecompany's (TGC) PlayStation 3 title "Journey". The player controls a robed figure who explores different types of terrain in different climates, with the goal of reaching the peak of a mountain seen in the distance. The gameplay mainly revolves around simple platforming and collecting pieces of scarf.

It is one of the few games I have come across whose art style and graphics bring out so much emotion in the player. To me it felt like a Disney movie; the design that went into this game is truly beautiful.

So I wanted to look up how the terrain rendering was done, and I came across several sources, including a PowerPoint presentation from TGC.

And not surprisingly, the process takes several steps.

- Heightmaps

There are actually three types of height maps used. A single 256x512 artist-generated image was used to generate the terrain, with B-spline interpolation smoothing out the hills at run time. I found this to be a really clever way to avoid a massive high-resolution height map, which could take up a lot of space. Detail height maps were used to create the ripples in the sand; several tiled maps were interpolated between to give the terrain more unique details. A third type of height map was used to create sand waves.
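To make the B-spline idea concrete, here is a minimal Python sketch of sampling a coarse height grid with uniform cubic B-spline weights. It is only an illustration under my own assumptions: the function names (`bspline_weights`, `sample_height`), the clamped borders and the list-of-lists grid are hypothetical, and this is not TGC's actual code.

```python
import math

def bspline_weights(t):
    """Uniform cubic B-spline basis weights for a fractional offset t in [0, 1)."""
    t2, t3 = t * t, t * t * t
    return (
        (1 - 3 * t + 3 * t2 - t3) / 6.0,
        (4 - 6 * t2 + 3 * t3) / 6.0,
        (1 + 3 * t + 3 * t2 - 3 * t3) / 6.0,
        t3 / 6.0,
    )

def sample_height(heights, x, y):
    """Sample a 2D grid of heights at continuous texel coordinates (x, y)."""
    rows, cols = len(heights), len(heights[0])
    ix, iy = math.floor(x), math.floor(y)
    wx = bspline_weights(x - ix)
    wy = bspline_weights(y - iy)
    total = 0.0
    for j in range(4):
        # Assumption: clamp at the border so edge texels are reused.
        row = min(max(iy + j - 1, 0), rows - 1)
        for i in range(4):
            col = min(max(ix + i - 1, 0), cols - 1)
            total += wy[j] * wx[i] * heights[row][col]
    return total
```

Because the four weights always sum to one and vary smoothly, a sharp one-texel spike in the source image comes out as a rounded hill, which is why a small image can still give smooth terrain.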

- Diffuse Contrast

A modified version of the Lambertian model was used in the final product; the Oren-Nayar model helped bring out more contrast in the terrain.

This is the shader code used:

    half OrenNayarDiffuse( half3 light, half3 view, half3 norm, half roughness )
    {
        // Cosines of the angles between the normal and the view / light vectors
        half VdotN = dot( view, norm );
        half LdotN = dot( light, norm );
        half cos_theta_i = LdotN;

        // Reflected (view) and incident (light) angles
        half theta_r = acos( VdotN );
        half theta_i = acos( cos_theta_i );

        // Cosine of the azimuth difference, from the two vectors projected onto the surface
        half cos_phi_diff = dot( normalize( view - norm * VdotN ),
                                 normalize( light - norm * LdotN ) );

        half alpha = max( theta_i, theta_r );
        half beta = min( theta_i, theta_r );

        // Roughness-dependent Oren-Nayar coefficients
        half sigma2 = roughness * roughness;
        half A = 1.0 - 0.5 * sigma2 / (sigma2 + 0.33);
        half B = 0.45 * sigma2 / (sigma2 + 0.09);

        return saturate( cos_theta_i ) *
            (A + (B * saturate( cos_phi_diff ) * sin(alpha) * tan(beta)));
    }

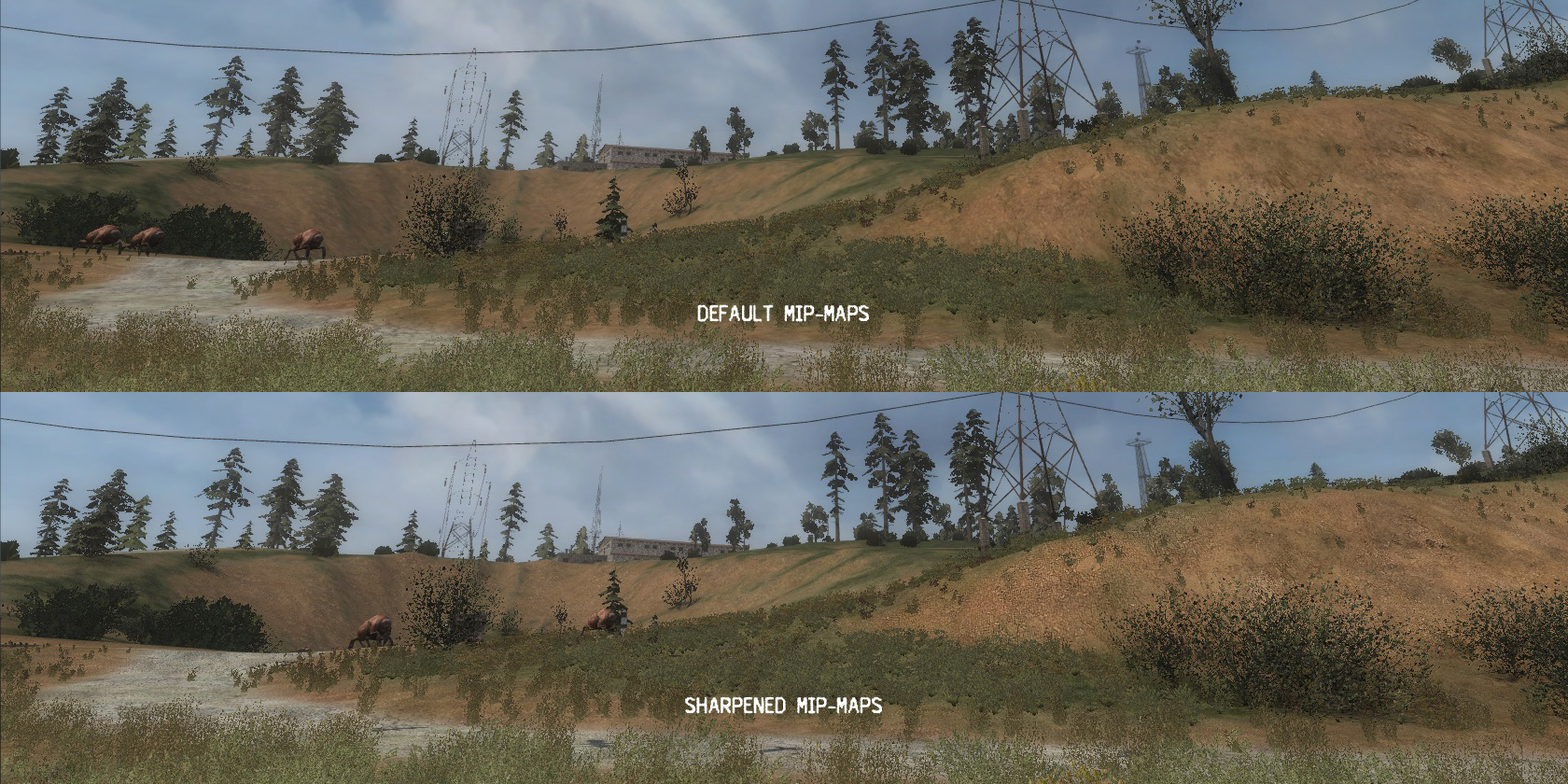
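To see what the roughness term does numerically, here is a rough Python port of the shader above. The helper names and the clamping before `acos` are my own additions for safety in floating point; treat it as a sketch for experimenting, not the game's code.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    # Assumption: return a zero vector when there is no tangent component.
    return tuple(x / length for x in v) if length > 1e-8 else (0.0, 0.0, 0.0)

def saturate(x):
    return min(max(x, 0.0), 1.0)

def oren_nayar_diffuse(light, view, norm, roughness):
    """Python port of the OrenNayarDiffuse shader; all vectors are unit length."""
    v_dot_n = dot(view, norm)
    l_dot_n = dot(light, norm)
    # Clamp to acos's domain (not in the shader, added for float safety).
    theta_r = math.acos(max(-1.0, min(1.0, v_dot_n)))
    theta_i = math.acos(max(-1.0, min(1.0, l_dot_n)))
    view_tangent = normalize(tuple(v - n * v_dot_n for v, n in zip(view, norm)))
    light_tangent = normalize(tuple(l - n * l_dot_n for l, n in zip(light, norm)))
    cos_phi_diff = dot(view_tangent, light_tangent)
    alpha = max(theta_i, theta_r)
    beta = min(theta_i, theta_r)
    sigma2 = roughness * roughness
    a = 1.0 - 0.5 * sigma2 / (sigma2 + 0.33)
    b = 0.45 * sigma2 / (sigma2 + 0.09)
    return saturate(l_dot_n) * (
        a + b * saturate(cos_phi_diff) * math.sin(alpha) * math.tan(beta)
    )
```

With `roughness` set to 0 the function collapses to plain Lambert (`A` is 1 and `B` is 0). With a non-zero roughness the result gets brighter when the light and the viewer are on the same side of the normal and darker when they are on opposite sides, which is where the extra contrast comes from.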

- Sharpened mip-maps

One issue with mip-mapping is that objects or terrain farther away lose a lot of detail and look smoother. The sand in Journey needs a grainy look, so sharpened mip-maps were used to bring out the detail and texture of the sand at a distance.

Sharp mip-maps off

Sharp mip-maps on
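One way to picture sharpened mip-maps is to build the mip chain yourself and sharpen each reduced level before storing it. The Python sketch below does that for a small square grayscale image with a 2x2 box filter and a simple unsharp mask. The filter choice, the `amount` parameter and the function names are my assumptions; the sources I found don't say exactly which sharpening filter was used.

```python
def downsample(img):
    """Halve a square grayscale image (values in [0, 1]) with a 2x2 box filter."""
    n = len(img) // 2
    return [
        [
            (img[2 * y][2 * x] + img[2 * y][2 * x + 1]
             + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
            for x in range(n)
        ]
        for y in range(n)
    ]

def sharpen(img, amount):
    """Unsharp mask: push each texel away from the average of its 4 neighbours."""
    n = len(img)

    def at(y, x):
        # Clamp coordinates so border texels reuse themselves as neighbours.
        return img[min(max(y, 0), n - 1)][min(max(x, 0), n - 1)]

    out = []
    for y in range(n):
        row = []
        for x in range(n):
            blur = (at(y - 1, x) + at(y + 1, x) + at(y, x - 1) + at(y, x + 1)) / 4.0
            value = img[y][x] + amount * (img[y][x] - blur)
            row.append(min(max(value, 0.0), 1.0))
        out.append(row)
    return out

def build_mip_chain(base, amount=0.0):
    """Return [base, mip1, mip2, ...] down to 1x1, sharpening each reduced level."""
    chain = [base]
    while len(chain[-1]) > 1:
        level = downsample(chain[-1])
        if amount > 0.0:
            level = sharpen(level, amount)
        chain.append(level)
    return chain
```

Calling `build_mip_chain(base)` gives the usual smooth chain, while `build_mip_chain(base, amount=0.5)` keeps more contrast in the smaller levels, which are the ones the GPU picks for distant sand.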

- Specular glitter

When I first saw the game, what stood out to me the most was the glittering sand effect. Real sand is composed of rock and mineral grains that act like tiny sharp crystals, reflecting specular sunlight into our eyes and producing that glitter.

Most of the sand texture was derived from noise normal maps. These were used to bring out the specular detail of individual grains of sand.
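A minimal Python sketch of how noise normals can produce glitter: each grain tilts the surface normal by a noise vector, and a tight Blinn-Phong highlight only fires for the few grains that happen to face the half vector. The `strength` and `shininess` values and the random noise are placeholders I made up; in the game the noise comes from a normal map texture.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v) if length > 1e-8 else (0.0, 0.0, 0.0)

def glitter_specular(base_normal, noise, light, view, strength=0.5, shininess=64.0):
    """Blinn-Phong specular term using a noise-perturbed per-grain normal."""
    grain_normal = normalize(tuple(b + strength * r for b, r in zip(base_normal, noise)))
    half_vector = normalize(tuple(l + v for l, v in zip(light, view)))
    return max(dot(grain_normal, half_vector), 0.0) ** shininess

if __name__ == "__main__":
    rng = random.Random(1)
    up = (0.0, 0.0, 1.0)
    light = normalize((0.3, 0.0, 1.0))
    view = normalize((-0.3, 0.2, 1.0))
    grains = [tuple(rng.uniform(-1.0, 1.0) for _ in range(3)) for _ in range(1000)]
    bright = sum(1 for g in grains if glitter_specular(up, g, light, view) > 0.5)
    print("sparkling grains out of 1000:", bright)
```

The high exponent is what makes the effect read as glitter rather than a broad shine: only a small fraction of the grains end up bright at any one moment, and which ones changes as the camera moves.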

- Anisotropic masking

The effect had an issue, though: some parts of the terrain had an unusual concentration of glitter, which gave an unnatural feel.

To solve this, a mip-mapped texture containing values based on distance from the camera is used to filter out the inappropriate specular highlights.
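Here is how I picture the masking trick, as a Python sketch. Every mip level of the mask texture holds a single value, so whatever level the GPU selects for a pixel (higher levels for surfaces that are far away or seen at a grazing angle) directly tells the shader how much glitter to keep. The fade range, the `texels_per_pixel` input and the function names are all my assumptions, not values from the presentation.

```python
import math

def build_mask_mips(levels, fade_start=2, fade_end=5):
    """One scalar per mip level: 1.0 keeps the glitter, 0.0 removes it."""
    mips = []
    for level in range(levels):
        t = (level - fade_start) / float(fade_end - fade_start)
        mips.append(1.0 - min(max(t, 0.0), 1.0))
    return mips

def sample_mask(mips, texels_per_pixel):
    """Emulate trilinear sampling: blend the two mip levels around a fractional LOD."""
    # Assumption: LOD is log2 of the longest axis of the pixel's texel footprint.
    lod = math.log2(max(texels_per_pixel, 1.0))
    lod = min(lod, len(mips) - 1)
    lower = int(math.floor(lod))
    upper = min(lower + 1, len(mips) - 1)
    frac = lod - lower
    return mips[lower] * (1.0 - frac) + mips[upper] * frac

def masked_glitter(glitter, mips, texels_per_pixel):
    """Attenuate a glitter value by the distance-dependent mask."""
    return glitter * sample_mask(mips, texels_per_pixel)
```

Close to the camera the footprint is about one texel per pixel, so the mask is 1 and the glitter passes through untouched. Far away, where many texels squeeze into one pixel, the mask drops to 0 and the clumps of glitter disappear.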

And that's most of it. The rest includes fluid simulation, a dust system and bloom.

This is my 10th and final post, and I would like to mention that I've learned so much over the past year. Computer graphics is truly amazing and you don't need to be a mathematician to do it; games like Journey have inspired me to really dive into it. This year I didn't have as much time as I would have liked to spend on shaders, since building a 3D game from scratch and incorporating all of the functionality was a challenge. However, next year we get to use a pre-built game engine, and I definitely plan to give shaders a lot of attention and create a beautiful game.

Thank you for reading :)